Running in Production

Deploying your assistant to production is a pivotal step in your development journey. In this chapter, we look at the key things you should know about this process.

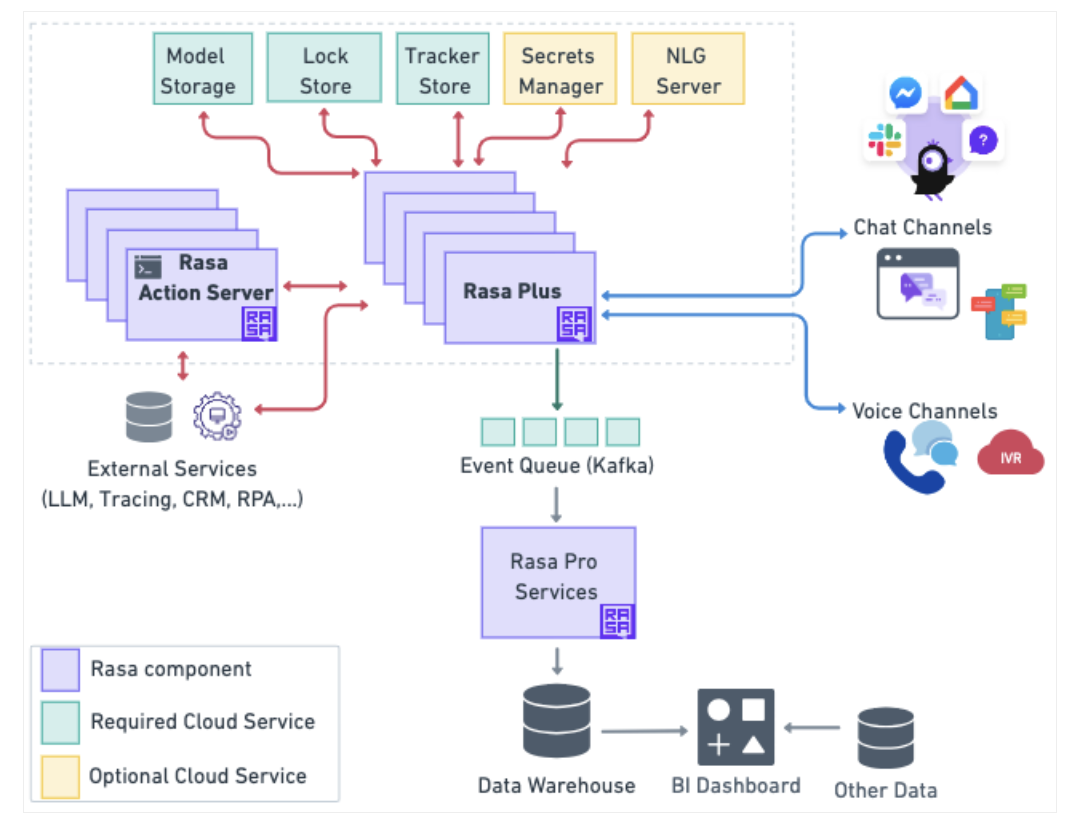

Rasa Pro Architecture

First, let’s look at the architecture of Rasa Pro, which makes it easier to understand the key components and the services needed to run them in production. Your deployed assistant depends on a number of cloud services that you need to monitor to make sure your assistant operates successfully. Some of them are required and some are optional. For each component, you can choose from a range of cloud services, the most popular providers being AWS, Azure, and Google Cloud. Check out the Rasa Pro documentation for a more detailed list.

Let’s have a look at each of these components.

Model Storage

Model storage is where your trained model is kept after you train your assistant. Upon initialization or restart, Rasa Plus downloads the trained model and loads it into memory. You have several options for where to store models: local disk, a remote server, or cloud storage such as Amazon S3, Google Cloud Storage, and others.
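When models are kept on local disk, the server needs a rule for which archive to load. The sketch below assumes trained models are saved as `.tar.gz` archives in a single directory (such as a `models/` folder) and applies one simple policy: pick the newest archive by modification time. The function `latest_model` is hypothetical and is not how Rasa itself resolves models from a remote server or cloud storage.

```python
"""Pick the most recently trained model archive from a local directory.

Hypothetical helper: assumes models are stored as .tar.gz archives in one folder.
"""
from pathlib import Path
from typing import Optional


def latest_model(model_dir: str) -> Optional[Path]:
    """Return the newest .tar.gz file in model_dir, or None if there is none."""
    archives = list(Path(model_dir).glob("*.tar.gz"))
    if not archives:
        return None
    # Newest by modification time, i.e. the most recent training run.
    return max(archives, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    print(latest_model("models"))
```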

Lock Store

The Lock Store is needed when you have a high-load scenario that requires the Rasa server to be replicated across multiple instances. It ensures that even with multiple servers, the messages for each conversation are handled in the correct sequence without any loss or overlap.
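The ordering guarantee can be illustrated with a ticket lock: each incoming message for a conversation takes a numbered ticket and waits until every earlier ticket for that conversation has finished. This is a minimal in-process sketch with hypothetical names. It only coordinates threads inside one process, whereas a production lock store has to be an external service shared by all Rasa instances (Rasa supports Redis for this).

```python
"""Per-conversation ticket lock: an in-process sketch of the lock store idea.

Hypothetical class; a real lock store shared by several servers is external.
"""
import threading
from collections import defaultdict
from contextlib import contextmanager


class ConversationLocks:
    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._next_ticket = defaultdict(int)  # next ticket to hand out, per conversation
        self._now_serving = defaultdict(int)  # ticket currently allowed to run

    @contextmanager
    def lock(self, conversation_id: str):
        with self._cond:
            ticket = self._next_ticket[conversation_id]
            self._next_ticket[conversation_id] += 1
            # Block until all earlier messages of this conversation are processed.
            while self._now_serving[conversation_id] != ticket:
                self._cond.wait()
        try:
            yield ticket
        finally:
            # Hand over to the next ticket, even if processing raised an error.
            with self._cond:
                self._now_serving[conversation_id] += 1
                self._cond.notify_all()
```

Messages of different conversations never wait on each other, while messages of the same conversation run strictly in ticket order. A single shared condition keeps the sketch short; a busier system would also clean up entries for finished conversations.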

Tracker Store

Your assistant's conversations are stored in a tracker store. Rasa provides several tracker store implementations out of the box, such as SQL, MongoDB, and DynamoDB, and you can also build your own custom tracker store.
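To make the idea concrete, here is a toy SQL-backed store built on Python's `sqlite3`. It is not Rasa's `TrackerStore` API; the class name and table layout are hypothetical. It only shows the core job of a tracker store: persisting each conversation's events under its sender ID so that any server instance can reload the conversation later.

```python
"""Toy SQL tracker store: conversation events stored per sender as JSON rows.

Hypothetical sketch, not Rasa's TrackerStore interface.
"""
import json
import sqlite3
from typing import Any, Dict, List


class SQLiteTrackerStore:
    def __init__(self, path: str = ":memory:") -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS events ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " sender_id TEXT NOT NULL,"
            " data TEXT NOT NULL)"
        )

    def append(self, sender_id: str, event: Dict[str, Any]) -> None:
        """Persist one event for a conversation."""
        with self._conn:  # commits the transaction on success
            self._conn.execute(
                "INSERT INTO events (sender_id, data) VALUES (?, ?)",
                (sender_id, json.dumps(event)),
            )

    def retrieve(self, sender_id: str) -> List[Dict[str, Any]]:
        """Return a conversation's events in the order they were stored."""
        rows = self._conn.execute(
            "SELECT data FROM events WHERE sender_id = ? ORDER BY id",
            (sender_id,),
        ).fetchall()
        return [json.loads(data) for (data,) in rows]

    def keys(self) -> List[str]:
        """Return the IDs of all stored conversations."""
        rows = self._conn.execute(
            "SELECT DISTINCT sender_id FROM events ORDER BY sender_id"
        ).fetchall()
        return [sender_id for (sender_id,) in rows]
```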

Secrets Manager

The HashiCorp Vault Secrets Manager is integrated with Rasa to securely store and manage sensitive credentials.
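For a feel of what a secrets manager provides, the sketch below reads a secret from Vault's KV version 2 engine over its HTTP API, so credentials never need to live in configuration files. It assumes a KV v2 mount named `secret` and the `VAULT_ADDR` and `VAULT_TOKEN` environment variables; the helper names are hypothetical, and Rasa's own Vault integration is configured separately rather than through this code.

```python
"""Read a secret from HashiCorp Vault's KV v2 engine using only the stdlib.

Assumptions: KV v2 mount "secret", VAULT_ADDR and VAULT_TOKEN env variables.
"""
import json
import os
import urllib.request
from typing import Dict


def build_kv2_request(addr: str, mount: str, path: str, token: str) -> urllib.request.Request:
    # KV v2 reads go to /v1/<mount>/data/<path>; the token is sent in X-Vault-Token.
    url = f"{addr.rstrip('/')}/v1/{mount}/data/{path.lstrip('/')}"
    return urllib.request.Request(url, headers={"X-Vault-Token": token})


def parse_kv2_response(body: bytes) -> Dict[str, str]:
    # KV v2 nests the key/value pairs under data.data (data.metadata holds version info).
    return json.loads(body)["data"]["data"]


def read_secret(path: str, mount: str = "secret") -> Dict[str, str]:
    req = build_kv2_request(os.environ["VAULT_ADDR"], mount, path, os.environ["VAULT_TOKEN"])
    with urllib.request.urlopen(req, timeout=5) as resp:
        return parse_kv2_response(resp.read())
```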

NLG Server

An NLG server lets Rasa outsource response generation and separate it from the dialogue learning process. The benefit is that responses can be generated or changed dynamically without retraining the bot, because the response text is decoupled from the training data. Note that the NLG server is an optional service that you have to build and deploy yourself.
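A minimal NLG server can be sketched with Python's standard library. It assumes the request and response shape described in Rasa's NLG server documentation: Rasa POSTs JSON containing `response` (the response name), `arguments`, `tracker`, and `channel`, and expects JSON back with at least a `text` field. Check these fields against your Rasa version. `RESPONSES`, `render_response`, and the port are hypothetical.

```python
"""Minimal NLG server sketch (stdlib only).

Assumes Rasa sends {"response", "arguments", "tracker", "channel"} and expects
{"text", ...} back. RESPONSES, render_response and port 5056 are hypothetical.
"""
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict

# Response texts live here (or in a CMS/database) instead of the training data,
# so they can change without retraining the assistant.
RESPONSES = {
    "utter_greet": "Hello {name}! How can I help?",
    "utter_goodbye": "Goodbye!",
}


class _KeepMissing(dict):
    """Leave unknown {placeholders} untouched instead of raising KeyError."""

    def __missing__(self, key: str) -> str:
        return "{" + key + "}"


def render_response(payload: Dict[str, Any]) -> Dict[str, Any]:
    name = payload.get("response")
    slots = (payload.get("tracker") or {}).get("slots") or {}
    template = RESPONSES.get(name, "Sorry, I don't have a response for that.")
    # Fill {slot} placeholders with the conversation's current slot values.
    text = template.format_map(_KeepMissing(slots))
    return {"text": text, "buttons": [], "image": None, "elements": [], "attachments": []}


class NLGHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps(render_response(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    # Answers POSTs on any path; point the nlg endpoint URL in Rasa's config here.
    HTTPServer(("0.0.0.0", 5056), NLGHandler).serve_forever()
```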

Event Queue (Kafka)

An event broker links your running assistant to the external services that process conversation data. It sends messages to a message streaming service, often called a message broker or message queue, which forwards Rasa events from the Rasa server to other systems. With the Kafka event broker, Rasa streams all events from the Rasa server to a Kafka topic for robust, scalable message handling and further processing.
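On the receiving side, a downstream service reads these events and does something useful with them. The sketch below covers only the consumer logic: it assumes each message is one JSON-serialised Rasa event with an `event` type (for example `user` or `bot`) and a `sender_id`, and it groups user messages by conversation. Fetching messages from the Kafka topic would be done with a Kafka client library, which is left out here; `summarize_events` is hypothetical.

```python
"""Consumer-side processing of Rasa events read from an event broker topic.

Assumes each message is a JSON event with "event" and "sender_id" fields.
"""
import json
from collections import defaultdict
from typing import Dict, Iterable, List


def summarize_events(messages: Iterable[bytes]) -> Dict[str, List[str]]:
    """Group the texts of user messages by conversation (sender_id)."""
    texts: Dict[str, List[str]] = defaultdict(list)
    for raw in messages:
        event = json.loads(raw)
        # Only user utterances are collected; bot and action events are skipped.
        if event.get("event") == "user":
            texts[event.get("sender_id", "unknown")].append(event.get("text") or "")
    return dict(texts)
```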

Ways to deploy in production

One of the easiest ways to deploy Rasa assistants in a production environment and orchestrate all of these components is through container orchestration systems like Kubernetes (recommended) or OpenShift.

Rasa gives you two options here. If you aren’t familiar with Kubernetes, you can use Rasa’s Managed Service, where Rasa’s team manages the Rasa Platform for you so you can move faster. Alternatively, you can deploy with Rasa’s Helm chart, which significantly simplifies the deployment process. Rasa’s documentation contains a detailed walkthrough of deploying your assistant with the Helm chart.

Continuous Integration and Continuous Deployment (CI/CD)

Finally, you should always set up CI/CD for your assistant. This makes sure that you and your team members don’t introduce breaking changes to your assistant, and that updating your assistant doesn’t interfere with its performance.

Continuous Integration (CI) is the practice of merging code changes frequently and automatically testing them as they are committed. Continuous Deployment (CD) means automatically deploying integrated changes to a staging or production environment. Together, they let you improve your assistant more frequently and test and deploy those changes efficiently. You can find an example CI/CD configuration that uses GitHub Actions.
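Whatever CI tool you pick, the core of the job is the same: run a fixed sequence of checks and fail the build as soon as one fails. Here is a small script a CI job could call. The default steps are assumptions: `rasa data validate` checks the training data, `rasa train` trains a model, and `rasa test` evaluates it; adjust them to the commands your Rasa Pro version and project actually use. `run_pipeline` is hypothetical.

```python
"""Run CI steps in order and stop at the first failure.

The DEFAULT_STEPS commands are assumptions; replace them with your project's checks.
"""
import subprocess
import sys
from typing import List, Sequence

DEFAULT_STEPS: List[List[str]] = [
    ["rasa", "data", "validate"],
    ["rasa", "train"],
    ["rasa", "test"],
]


def run_pipeline(steps: Sequence[Sequence[str]]) -> int:
    """Run each command; return the first non-zero exit code, or 0 if all pass."""
    for cmd in steps:
        print("==>", " ".join(cmd), flush=True)
        result = subprocess.run(list(cmd))
        if result.returncode != 0:
            print(f"Step failed with exit code {result.returncode}: {' '.join(cmd)}", file=sys.stderr)
            return result.returncode
    return 0


if __name__ == "__main__":
    # A non-zero exit code makes the CI job (and the merge check) fail.
    sys.exit(run_pipeline(DEFAULT_STEPS))
```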

There are many CI/CD tools out there, such as GitHub Actions, GitLab CI/CD, Jenkins, and CircleCI. We recommend choosing a tool that integrates with whatever Git repository you use.