Rasa is Always Improving


Appendix

Let’s do a thought experiment. For each utterance below, let's try to figure out which intent it belongs to.

Utterance 1: "yes"

It feels like "affirm" might be a good intent for this one.

Utterance 2: "no"

This one is also pretty easy. It feels like "deny" might be a good intent for this one.

Utterance 3: "good afternoon!"

This one is very tricky. If a user were to say "good afternoon!" at the end of a conversation, it might mean "goodbye". But if it's said at the beginning of the conversation, it might mean "hello". This is quite the conundrum. Not only do we have an utterance that can belong to two intents, but these two intents also carry opposite meanings.
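To make the conundrum concrete, here is a minimal sketch of how these utterances could show up in Rasa 2.x NLU training data (an `nlu.yml` file). The intent names `greet` and `goodbye` and the extra examples "hello" and "bye" are assumptions for illustration. Notice that "good afternoon!" has to be listed under two intents, so a classifier that only sees the message text has no principled way to choose between them.

```yaml
version: "2.0"
nlu:
# Utterances 1 and 2 map cleanly onto a single intent each.
- intent: affirm
  examples: |
    - yes
- intent: deny
  examples: |
    - no
# Hypothetical intents: "good afternoon!" is a valid example of both,
# so the message text alone cannot decide which one the user meant.
- intent: greet
  examples: |
    - hello
    - good afternoon!
- intent: goodbye
  examples: |
    - bye
    - good afternoon!
```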

Rasa is Always Improving

You can build an assistant using intents, but intents are fundamentally limited. Real language is much more flexible and expressive than what intents allow, so at Rasa we're researching methods to allow for intent-less conversations.

The "good afternoon!" example is worth showing because it illustrates how Rasa is always improving. Rasa has a large and active research team that is continuously improving how you can build assistants. We've recently released end-to-end learning, an intent-less feature of Rasa. Before diving into how that works, it helps to explain our overall vision of how Conversational AI will evolve over the next few years.

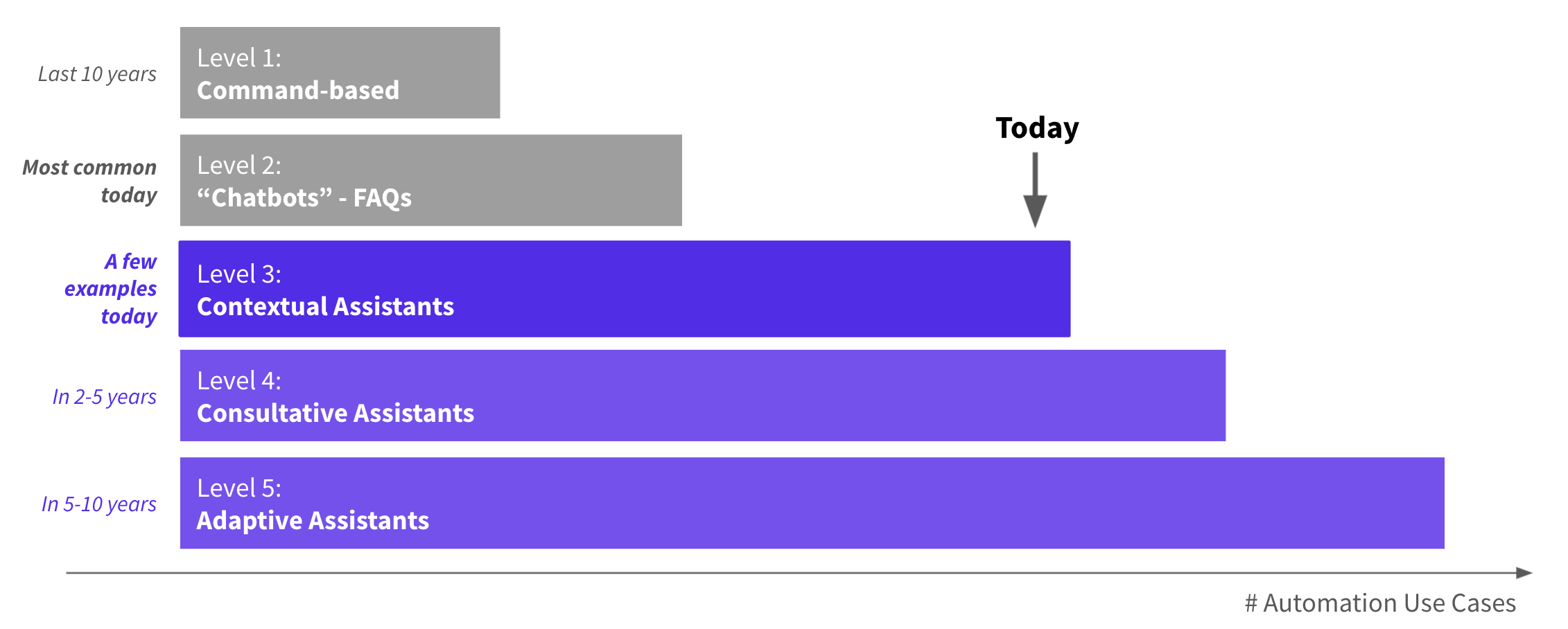

Five Levels of Conversational AI

It helps to think of the capabilities of virtual assistants in terms of "levels". Looking to the past and to the future, we think there are five milestones, which we call levels, that are worth observing. As we progress through the five levels, assistants become more accommodating of the way humans think, and feel less like an API endpoint.

We'll use the example of a mortgage assistant to explain the milestone that each level represents.

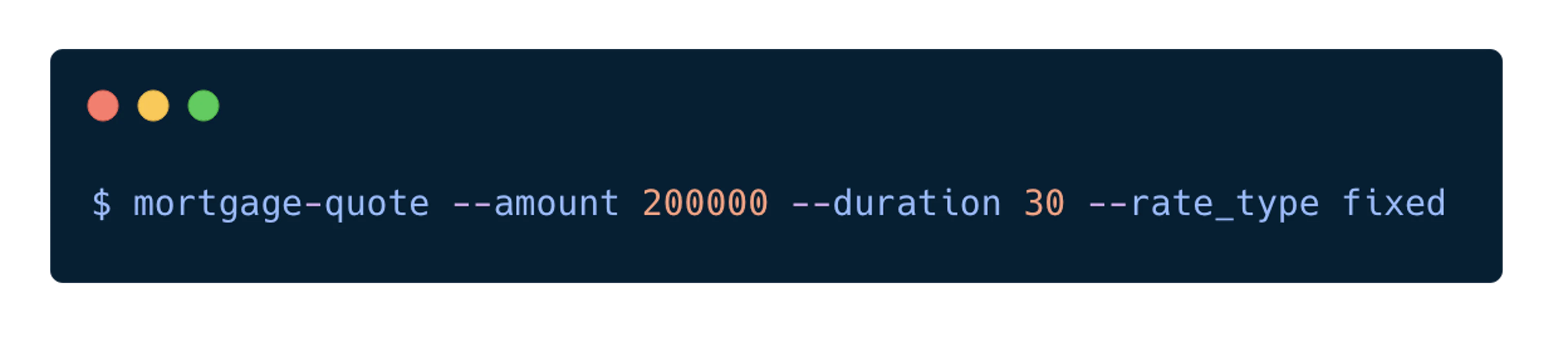

Level 1: Command Line Assistants

At level 1, assistants can still be helpful, but they put all the cognitive load on the end user. The end user needs to know exactly which input fields to provide, and also needs to provide them in the format the assistant expects. Many command line apps fit this category.

It's not perfect, but still much more convenient than doing the calculations by hand.

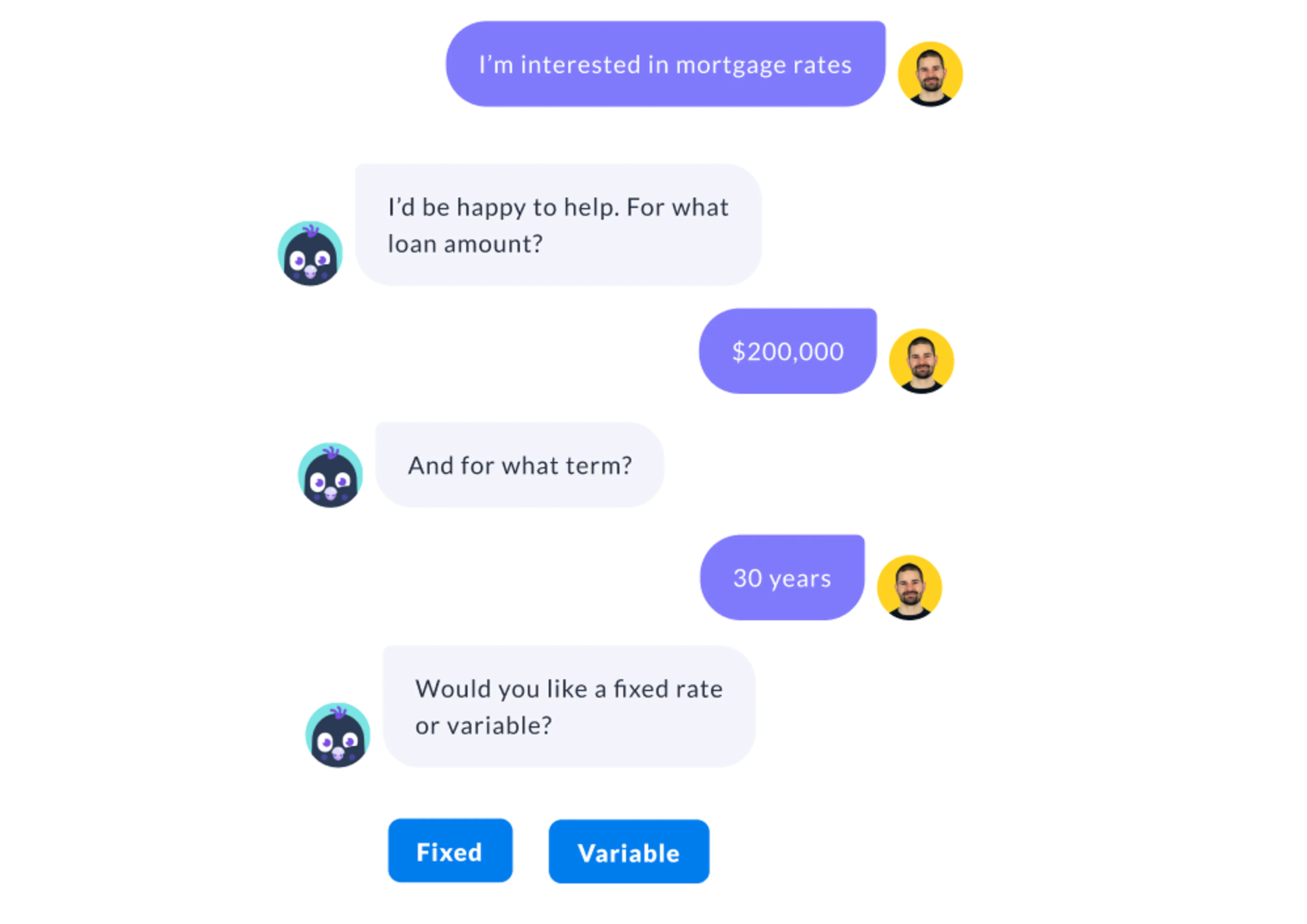

Level 2: Intent Based Assistants

At level two, we start to see less cognitive load on the end user. The assistant tries to work out what the user is interested in by detecting their intent, but it can crash hard when the user deviates from the predefined "happy path". If the user says something that doesn't resemble any intent, the assistant will have a bad time.

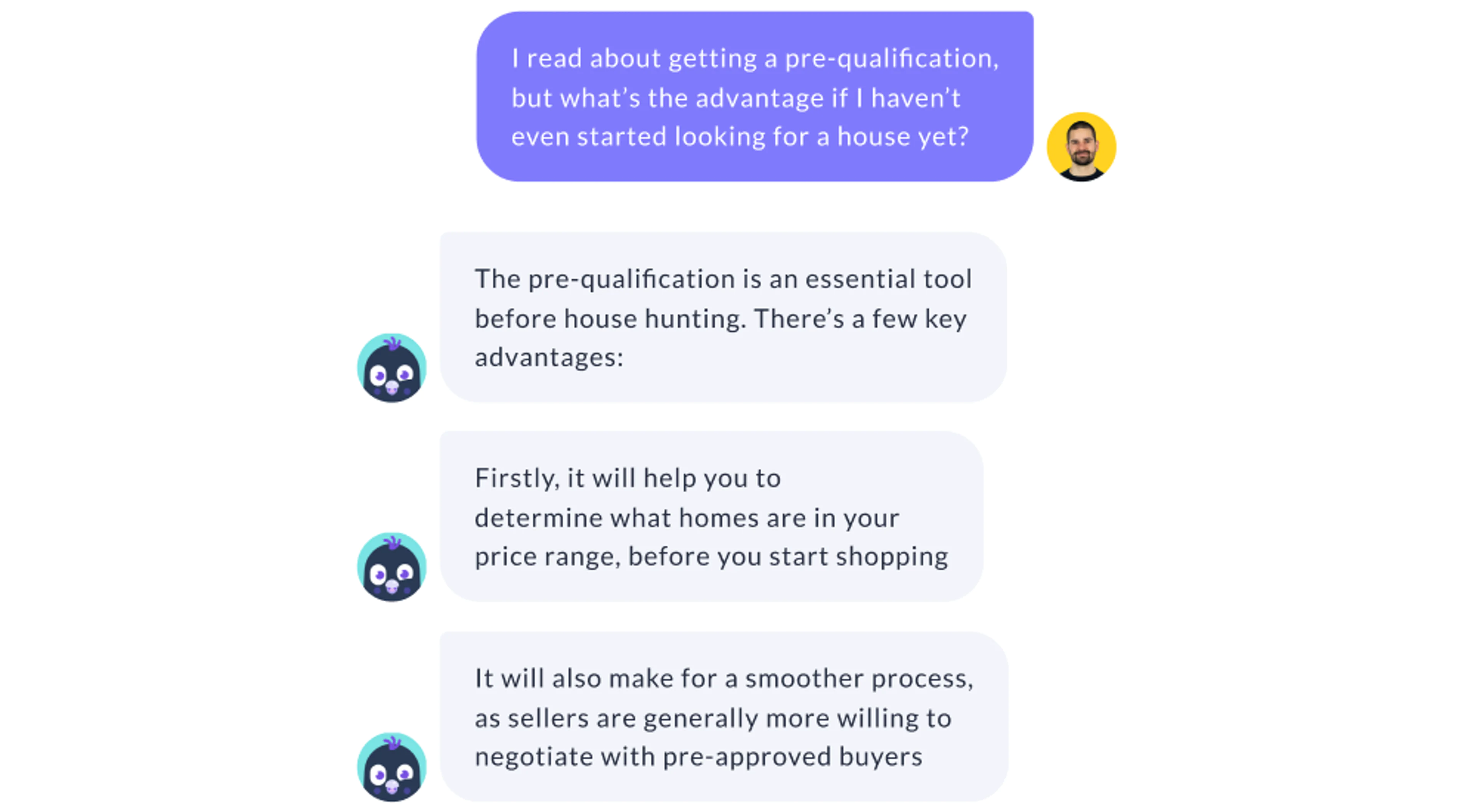

Level 3: Contextual Assistants

At level three, we start to see assistants that can deal with context. The user still has to know exactly what their goal is, but they no longer have to know how to use the assistant to avoid breaking the conversation. This is great, because it means the user can ask for help along the way without breaking the flow.

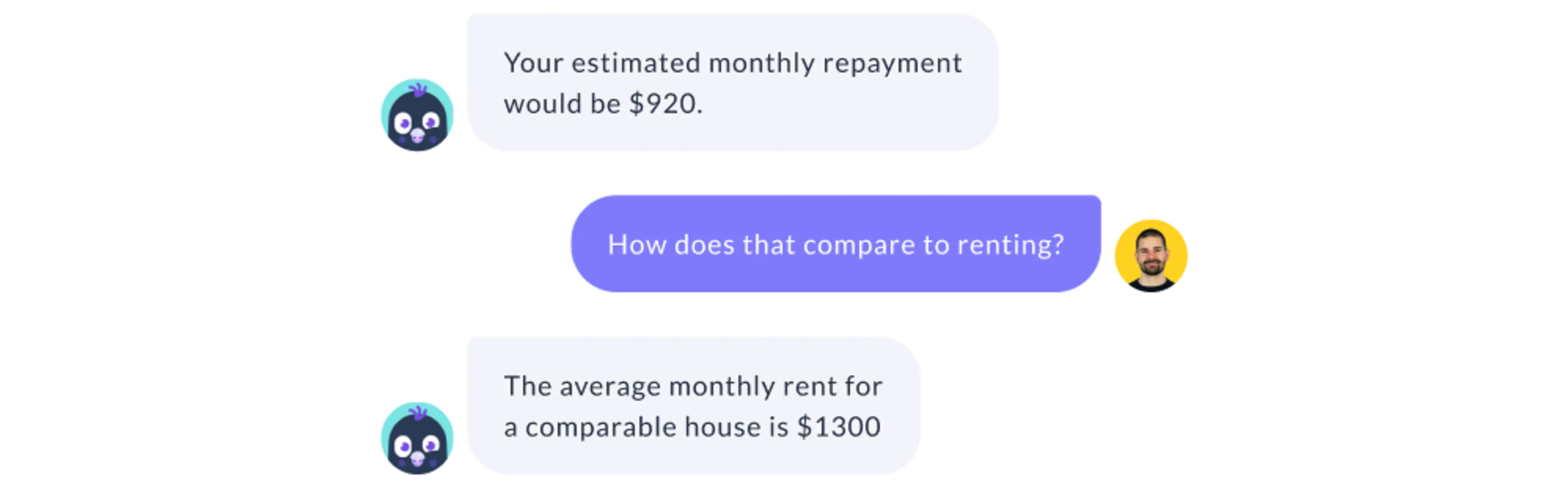

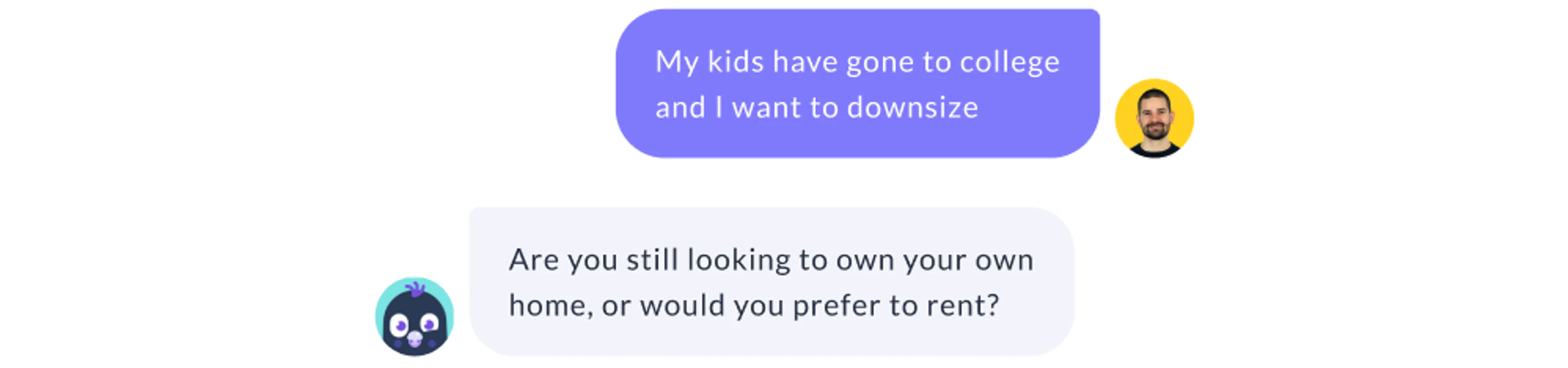

Level 4: Consulting Assistants

At level four, we start to see assistants that are able to adapt based on information that the user provides. Asking for a mortgage is different if you're downsizing because the kids have gone to college than if you've just had kids and need to upsize. It's the assistant's job to figure out how it can help, so it needs to work out exactly what the user needs.

Level 5: Adaptive Assistants

At level five, we start to see assistants that can adapt very quickly. The assistant detects when it's missing an ability, and it takes little effort from the conversational designer to add a new feature to the assistant.

Climbing the Ladder

At each level, we are lowering the burden on the end user, as well as on the conversational designer, to translate what they want into the language of the conversational assistant. That's exactly where the value of conversational AI lies.

End to End Learning

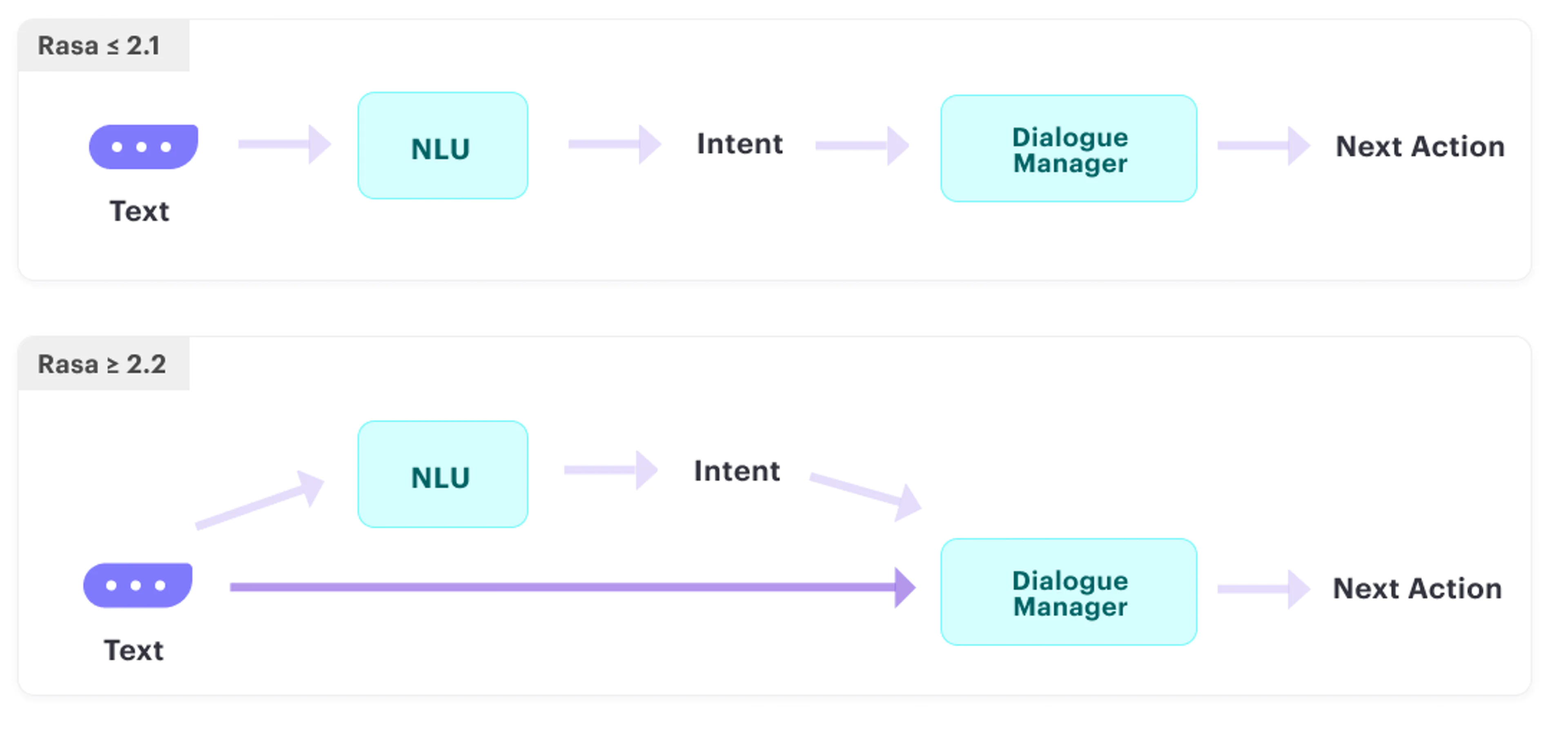

Right now, we believe that it's possible to create level three assistants with Rasa. As of Rasa 2.2, we've released the end-to-end learning feature, which allows you to train on utterances that don't belong to an intent.

Code

Let's now look at the code behind the end-to-end feature shown in the video. You can follow along, but you can also compare the standard project with the end-to-end project on GitHub.

If you want your assistant to be able to handle utterances without an intent, you'll mainly need to add those utterances to your stories.yml file.

```yaml
version: "2.0"
stories:
- story: unhappy pizza path
  steps:
  - intent: greet
  - action: utter_greet
  - intent: recommend
  - action: utter_pizza
  # raw user text instead of an intent label
  - user: "I already had that yesterday."
  - action: utter_something_else

- story: unhappy pizza path
  steps:
  - intent: greet
  - action: utter_greet
  - intent: recommend
  - action: utter_pizza
  - user: "I'm allergic to bread/dough."
  - action: utter_something_else
```

In this example we have stories from an assistant that asks the user if they are interested in buying a pizza. When a user says "I already had that yesterday.", we could interpret that as a "deny" intent in the context of the conversation, but it would be a very strange example without that context. That's why these examples are great candidates for end-to-end learning.
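The same end-to-end approach could, in principle, address the "good afternoon!" conundrum from the thought experiment. The sketch below is hypothetical: the story names and the `utter_goodbye` response are assumptions, while `greet`, `recommend`, `utter_greet`, and `utter_pizza` are reused from the stories above. Instead of forcing the text into a single intent, each story records the raw user text together with the turns around it, so the model has a chance to learn from context which reply fits.

```yaml
version: "2.0"
stories:
# Hypothetical: "good afternoon!" opens the conversation,
# so the assistant replies with a greeting.
- story: good afternoon as a greeting
  steps:
  - user: "good afternoon!"
  - action: utter_greet

# Hypothetical: the same text after a pizza recommendation,
# at the end of the conversation, so the assistant says goodbye.
- story: good afternoon as a goodbye
  steps:
  - intent: greet
  - action: utter_greet
  - intent: recommend
  - action: utter_pizza
  - user: "good afternoon!"
  - action: utter_goodbye
```

Two stories are only meant to show the format; whether an assistant reliably learns the distinction depends on having enough examples like these in the training data.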

The Future

The end-to-end learning feature is just one of what will be many improvements to Rasa. We need many incremental improvements to build the infrastructure required for level five assistants, but we also need to enable every developer, not just the big tech companies, to push what’s possible with conversational AI.

We don’t know exactly what improvements we’ll see over the next few years, but we do have a strategy.

Open Source

The first part of our strategy is open source. Open source makes a field progress much faster than keeping code locked away. Building level five assistants is hard, and it’s not like we at Rasa have all the answers. Developers regularly hack things into our open source framework for their own purposes, and it’s during this hacking that new discoveries get made. Anyone can extend our infrastructure and put their new idea to work. As an example, there’s a small community of developers in China making Rasa components that work well for Chinese, and there are many other side projects that offer NLU components for non-English languages.

Another benefit of the open source ecosystem is that Rasa can plug into existing tools as well. Rasa natively supports spaCy and Hugging Face, so any improvements to these tools also improve the Rasa ecosystem.
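As an illustration, a Rasa 2.x `config.yml` pipeline that draws on both spaCy and Hugging Face might look like the sketch below. The component names are Rasa NLU components, but the specific spaCy model (`en_core_web_md`), the pretrained weights (`rasa/LaBSE`), and the epoch count are assumptions chosen for illustration; in practice you would pick models that suit your language and data.

```yaml
language: en

pipeline:
# spaCy: load a spaCy language model and reuse its tokens and word vectors.
- name: SpacyNLP
  model: "en_core_web_md"
- name: SpacyTokenizer
- name: SpacyFeaturizer
# Hugging Face: add dense features from a pretrained transformer (assumed weights).
- name: LanguageModelFeaturizer
  model_name: "bert"
  model_weights: "rasa/LaBSE"
# Intent classification and entity extraction on top of both feature sets.
- name: DIETClassifier
  epochs: 100
```

The spaCy model is not bundled with Rasa and would need to be downloaded separately, for example with `python -m spacy download en_core_web_md`.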

Research

The second part of our strategy is that we heavily invest in research. We have a large research team and their work always ends up in Rasa’s codebase. Rasa contributes in two ways though: we do our own research on the approaches we believe will get us to the next level, and we look for the most meaningful breakthroughs and find a way to make our community benefit from them. Many of the first versions of these research projects are done out in public so that our community can collaborate and give us feedback.

Community

This brings us to the third part of our strategy: our community. As a company, we’re not going to come up with every idea that moves the technology stack forward. We invest in a global, friendly community of developers that exchange ideas. We’re actively investing in developer education, and we also host a forum that is actively maintained by everyone in the company. It’s also the place where many non-English techniques and tools are discussed.

Machine learning is no longer a niche field and is becoming part of standard software engineering, so we need to make sure that our technology stack is understandable for developers. That's why we also invest a lot in educational material. We offer many free courses in our learning center, and you can also find many educational resources on our YouTube channel.

Feel free to explore these resources and let us know on our forum if you have any questions.

Thanks for Listening!

We hope you enjoyed this series of videos.

- Rachael, Juste & Vincent